mrinfo

In the late 1980s, the developers of IP Multicast designed the MBone, an overlay network composed of tunnels that interconnected workstations running an implementation of the Distance Vector Multicast Routing Protocol (DVMRP). Several tools have been developed to monitor and debug the MBone. Most of these tools have been deprecated with the replacement of DVMRP by the Protocol Independent Multicast (PIM) family of multicast routing protocols with one notable exception: mrinfo.

mrinfo is a fascinating probing tool since it natively comes with a router level vision and also provides an L2 aware vision (see our IMC 2010 paper and our L2L3 bipartite graph analysis). Furthermore, it is not forwarding dependent such as traceroute and does not suffer from the same limitations (IMC 2009).

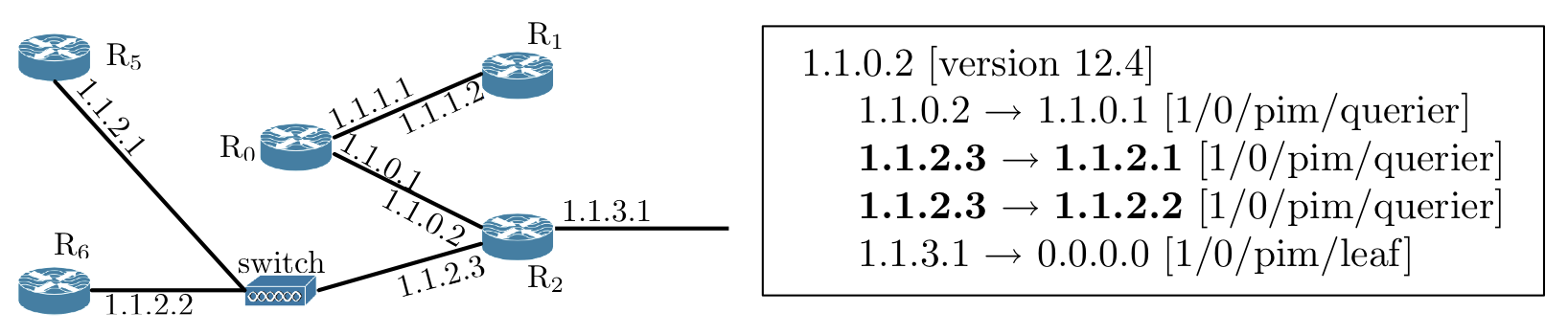

Fig.1: IGMP probing output

Fig. 1 shows an example of the usage of mrinfo to query the router R2, 1.1.0.2 being the responding interface of R2. mrinfo reports that this router is directly connected to R0 (through interface 1.1.0.1). We can also notice that R0 is connected to routers R5 and R6 through an L2 network (labeled "switch" in Fig. 1) because interface 1.1.2.3 appears twice in the mrinfo reply (see bold text in Fig. 1). Finally, mrinfo reports that interface 1.1.3.1 has no multicast neighbor because the right IP address is equal to 0.0.0.0 (or is directly connected to a LAN, as indicated by the "leaf" keyword). All this information is obtained by sending a single IGMP message. In practice, mrinfo provides information similar to the output of a show command on the router's command line interface.

mrinfo-rec

Our initial approach in probing the network with mrinfo is recursive and we call such a probing scheme mrinfo-rec (see IMC 2009, IMC 2010, and IMC 2010). Initially, mrinfo-rec is fed with a single IP address corresponding to the first router attached to the mrinfo-rec vantage point. mrinfo-rec probes this router and recursively applies its probing mechanism on all the collected IP addresses. These recursive queries stop at unresponsive routers or when all discovered routers have been queried. The same process is run every day. It is worth to notice that an address not replying to an IGMP probe during a given day will not be queried the days after except if it appears again in a list of captured addresses.

To illustrate this behavior, let us apply it on the topology depicted in Fig. 1. mrinfo-rec receives, as input, an IP address belonging to router R0. From R0, mrinfo-rec collects a set of neighbor IP addresses, i.e., {1.1.1.2, 1.1.0.2}. For all IP addresses in this set that were not previously probed, mrinfo-rec sends an IGMP ASK_NEIGHBORS message and, if the probed router replied, it again runs through the set of neighbor IP addresses collected.

This recursive probing scheme comes with the strong advantage of being very easy to setup as it initially requires only a single IP address as input. However, it has the drawback of limiting the mrinfo-rec probing spectrum. If the address set specified as input for a given day corresponds to non-responding routers (because, simply, the ISP filters IGMP messages), mrinfo-rec will not be able to discover any topological information. To overcome this limitation, the set of responding router addresses of a given day is given as input of mrinfo-rec the next day. Datasets related to mrinfo-rec are available here (global data on a daily basis) and here (per AS data on a per month basis). More information are available here (UdS) and here (UCL). We also develop an efficient router-to-AS algorithm to extract intra-domain graphs and mark boundaries between AS (take a look to the PAM 2010 paper and the related code).

However, mrinfo (and by extension mrinfo-rec) suffers from several drawbacks. First, its view is limited to multicast components and, in the same way that ICMP messages may be rate limited or filtered for traceroute probing, IGMP messages can be filtered by some ISPs. Second, we observed several technical limitations: it suffers from an IP fragmentation issue and the lack of multiplexing support.

MERLIN

In order to fix those issues (at least a maximum of them), we implemented a new tool, still based on IGMP probing, called MEasure the Router Level of the INternet (MERLIN). MERLIN is a distributed platform and allows one to infer the multicast map of the Internet at the router level and is designed for large scale topology discovery campaigns.

The objective of MERLIN is to collect an accurate router-level topology snapshot of a targeted AS by combining IGMP probing, traceroute, and an alias resolution technique. More precisely, the first challenge of MERLIN is to use traceroute seeds in order to improve its probing coverage by overcoming the limitations of a pure IGMP recursion scheme. Then, the use of an alias resolution technique and traceroute allows us to provide consistent ISP maps by reconstructing a connected topologies.

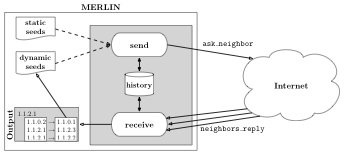

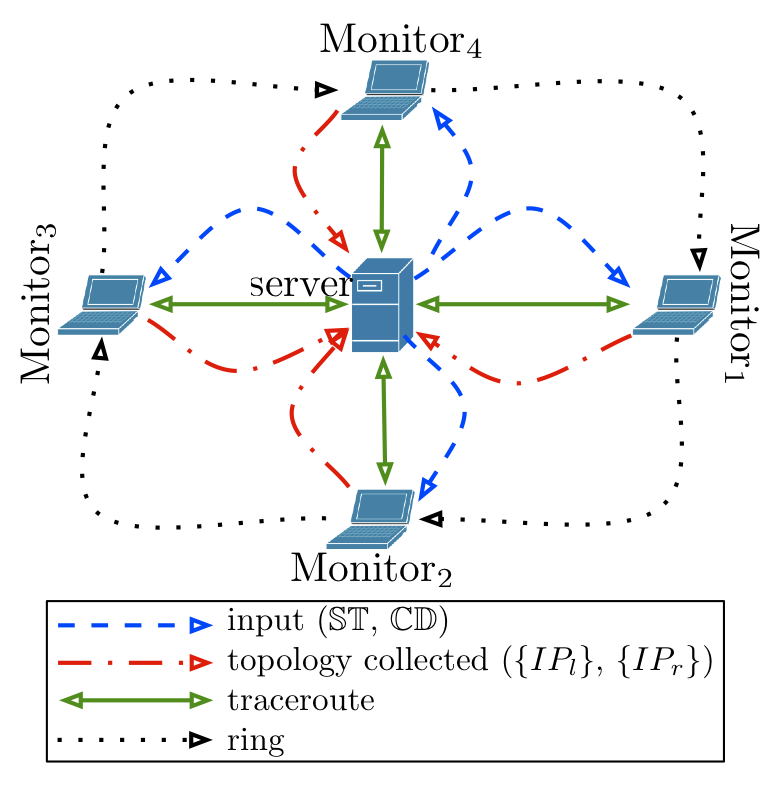

Fig.2: The MERLIN platform

MERLIN is based on a centralized client-server architecture and runs over Unix platforms. A central server (JSAC 2011), written in Python and C++, controls the vantage points, called monitors, written in C. The MERLIN monitors are potentially spread all over the world and logically organized as a ring, as illustrated in Fig. 2. Only one monitor actively probes the targeted domain at a given time. This probing period by a single monitor is called run. There are as many runs as monitors in the system per probing cycle. A cycle is completed when all monitors have probed the targeted domain during a given round around the monitor ring. Note that, for a given targeted AS, several cycles can be required per IGMP probing stage, i.e., the number of cycles required to take advantage of all current seeds including those recursively found during previous runs. An IGMP probing stage might be made of an incomplete number of cycles: a stage stops when no more topological data can be collected using the current seed set. In order to increase the coverage of an AS probing campaign, between each stage, MERLIN launches internal Paris traceroute campaigns to collect new fresh seeds and better understand the topology lacks.

The input sent by the server to each monitor is composed of a list of IP addresses called current seeds (CD in Fig. 2). This set is made of previously collected mrinfo-rec data, external traceroute collected by Archipelago, and initial traceroutes run by the server.

Each input list is subject to a standard IP-to-AS mapping filtering process so that only IP addresses mapped to the targeted AS are used. Each monitor probes the network recursively (see below for details). For "controlling" this recursion and then avoiding inter-monitor redundancy, the server computes a list of IP addresses, called the Stop Set (ST in Fig. 2). This list contains IP addresses that have been previously discovered by MERLIN monitors and, thus, do not have to be probed again.

Fig. 3 depicts the architecture of the MERLIN monitor (NGI 2011). The monitor is composed of two parallel processes: send, in charge of sending probes to the network, and receive, in charge of processing the replies returned back by the routers. To minimize redundancy, the sending process never probes an IP address previously discovered: the "history" box in Fig. 3 is initialized with the Stop Set and is maintained up-to-date by the monitor, avoiding so the redundancy in probing.

The send process is fed by both a static IP address list and a dynamic IP address list collected from replies. This dynamic list is used for recursion. When the monitor starts, the send process operates in a static mode using so IP addresses from the static list. Once replies are collected from the receive process, the dynamic list is built based on publicly routable neighbor IP addresses: the recursion is engaged. The send process enters in the dynamic mode and gives priority to targets of the dynamic list. Each time the dynamic list becomes empty (i.e., the current recursion is finished), the send process is again fed with remaining IP addresses from the static list. This recursion first scheme was a design choice that has been made in order to minimize the probability of reprobing a given router.

We provide two datasets based on MERLIN: one targets the whole Internet while the other one targets a subset of specifics ASes.

Multicast Core Reconstruction

MERLIN could suffer from the multicast graph "disconnection state" due to IGMP filtering: some multicast routers do not reply to IGMP probes (local filtering) while some other do not forward IGMP queries (transit filtering). While the second problem can be somehow overcome with the use of multiple vantage points in a cooperative distributed platform, the first one is more challenging as it impacts the collected topologies. Indeed, multicast routers that do not respond to IGMP probes may divide the resulting collected multicast graph into disjoint components.

Assuming that the "globally accessible" multicast graph is physically connected, we can expect that scattered components and isolated routers result from non-responding multicast routers (these routers might be partially seen at the IP level through neighborhood information of IGMP compliant routers). Indeed, even a low proportion of non-responding routers may result in an huge disconnection of the multicast graph. This leads to a situation in which discovered multicast maps are, actually, a set of disconnected components.

This "disjoint state" may be exacerbate by unicast adjacencies lacks. In practice, a multicast router can be configured at the interface granularity: each interface can independently support multicast or not. Nevertheless, an ISP supporting IP multicast should enable multicast everywhere in its network to ensure the correct PIM tree establishment. Some exceptions may arise at inter-area border routers and AS border routers. An area border router does not need to support multicast adjacencies with routers belonging to non-multicast areas. Between ASes, the BGP routing protocol can use specific multicast forwarding entries to disseminate PIM messages. Thus, although it is likely that a multicast border router will not enable multicast on all its interfaces, it is also likely that the multicast graph should be connected. Even in presence of non multicast adjacencies, there should exist at least one multicast path between each multicast component. Despite this reasonable assumption, it is also true that the connectivity of the multicast graph can be lower than the physical one (including unicast-only components).

Therefore, one of the objective beyond MERLIN is to merge the maximum possible number of disjoint IGMP components into a large one. For that purpose, after an IGMP probing phase, we use traceroute like exploration and alias resolution: IP level links and aliased IP addresses - forming so routers - fill the gap among disjoint components discovered during IGMP probing.

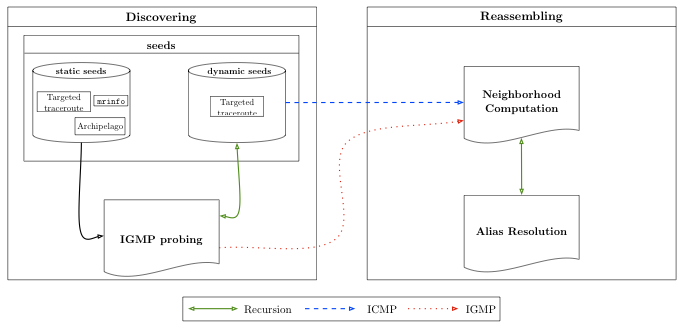

Fig.4: The whole MERLIN process

Fig. 4 summarizes the whole topology collection process. Two steps are required: Discovering and Reassembling.

The Discovering step is based on IGMP probing with MERLIN, as explained above. Once the IGMP router level topology has been collected, we have a scattered topology made of disjoint IGMP components,

The next step, Reassembling, aims at reconnecting the IGMP components in order to, at best, obtained a single large and highly connected component. Using IP level links discovered with our traceroutes between distinct IGMP components, the alias resolution step can start. The main challenge here is to identify IP addresses pairs that are good candidates for alias resolution in order to efficiently expand IGMP components and so reassemble them. We do not want to test all possible pairs: only selected candidate IP addresses pairs (using a Neighborhood Computation) are tested using a standard alias resolution technique, Ally (note that our reassembling process can work with any alias resolution technique). The alias resolution recursion continues until no new candidates are found. At the end of the process, we can expect to achieve our goal: providing a single large and highly connected graph at the router level.

Reconnected graphs are available here.